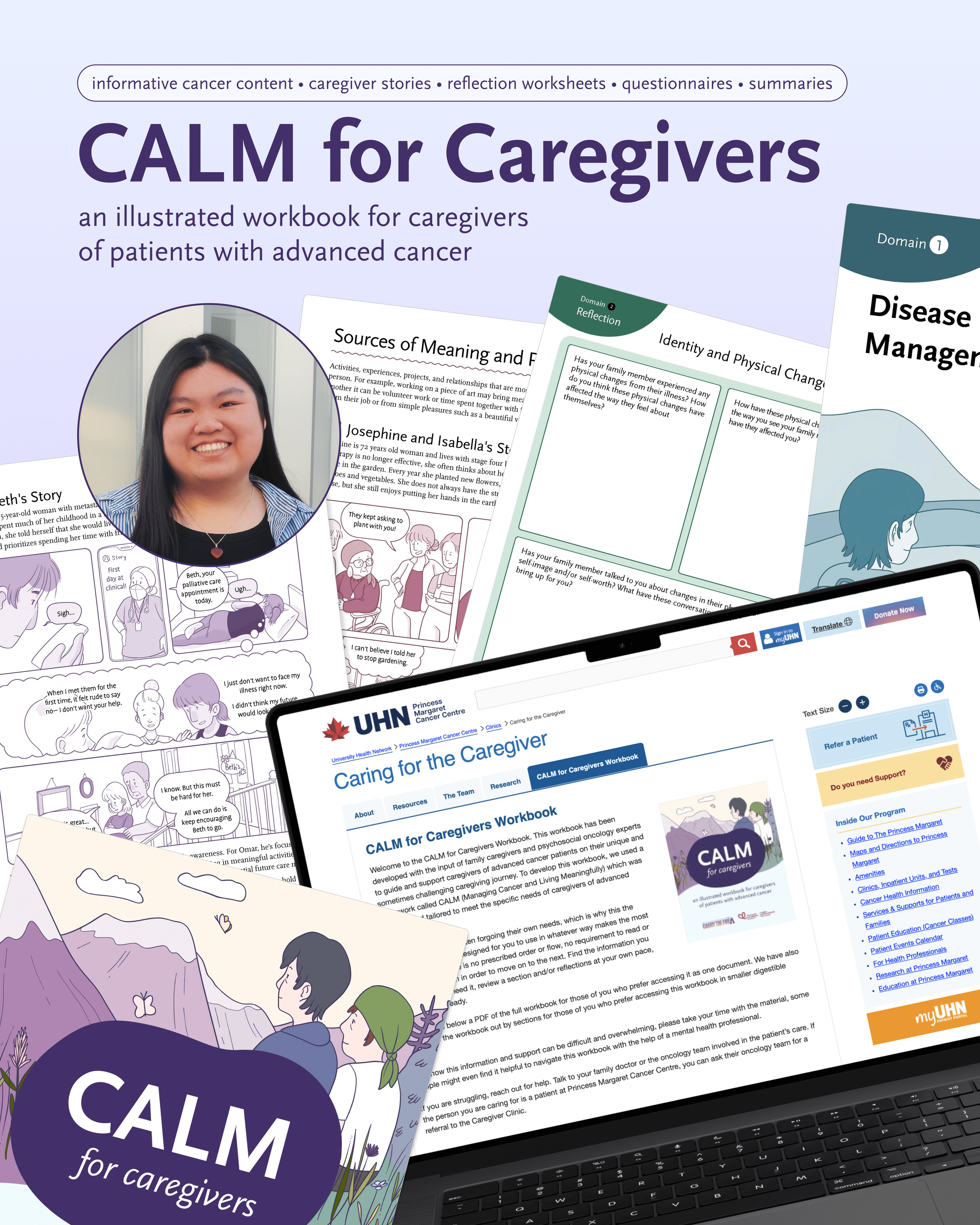

Emily Huang, MScBMC ’25, in collaboration with the Caregiver Clinic at Princess Margaret Cancer Centre, created an illustrated workbook designed to support caregivers of patients with advanced cancer. Image provided by Emily Huang.

Caregivers often put aside their own emotional and mental health when caring for patients with advanced cancer.

To address this challenge, Emily Huang, a 2025 graduate of the Master of Science in Biomedical Communications (MScBMC) program, worked with the Caregiver Clinic at Princess Margaret Cancer Centre to create an illustrated workbook designed to support caregivers of patients with advanced cancer.

The workbook, titled CALM for Caregivers: An Illustrated Workbook for Caregivers of Patients with Advanced Cancer, is based on content developed by the Managing Cancer and Living Meaningfully (CALM) team at Princess Margaret.

“As part of my master’s research project, I transformed CALM’s text content into engaging illustrations and graphic narratives to resonate with a caregiver’s lived experience,” Huang says. “Through thoughtful and collaborative design, I created an intuitive workbook that adapts to a caregiver’s lifestyle.”

Shelley Wall, MScBMC associate director and Huang’s faculty advisor, says that “digesting dozens of pages of purely textual information can be daunting, especially for caregivers who are under stress. Emily has used her gift for visual storytelling and design to translate the CALM team’s document into a truly welcoming resource that’s easy to navigate.”

The illustrated workbook is divided into five sections. Each section contains information about cancer, caregiver stories told as graphic narratives, reflection worksheets, questionnaires, and more. Caregivers can work through the material in any order, whenever they need it, and at their own pace.

Huang says that CALM for Caregivers is now available as a free online resource for caregivers worldwide. The workbook can be accessed on the UHN Princess Margaret Cancer Centre web site:

https://www.uhn.ca/PrincessMargaret/Clinics/Caring_for_the_Caregiver#tab5

~

Web sites referenced:

Emily Huang's online portfolio: https://www.emilyhuang.ca

UHN Caring for the Caregiver: https://www.uhn.ca/PrincessMargaret/Clinics/Caring_for_the_Caregiver#tab5

Shelley Wall’s faculty profile: https://bmc.med.utoronto.ca/faculty-staff/#wall